By encoding a feature of biological intelligence called reinforcement learning, in which we iteratively learn from successes and failures, “deep neural networks” (DNNs) have revolutionized artificial intelligence with spectacular demonstrations of mastery in chess and Go. But they struggle to deal with the real-world problems encountered daily by humans and other animals. A new collaboration based at MIT posits that a fundamental shortcoming of deep neural networks is that they are merely neural. The team aims to prove DNNs could become much more powerful by integrating another brain cell type: astrocytes.

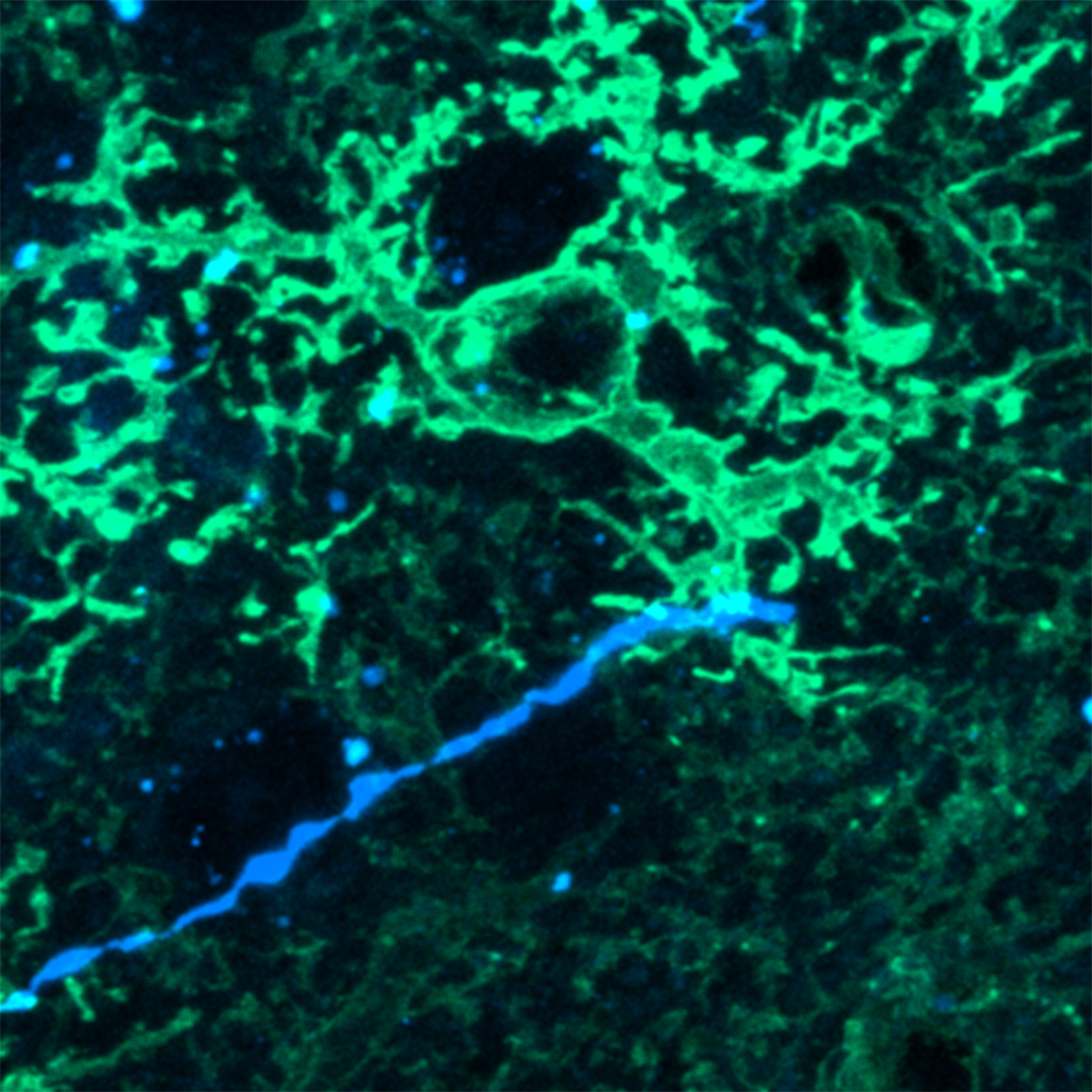

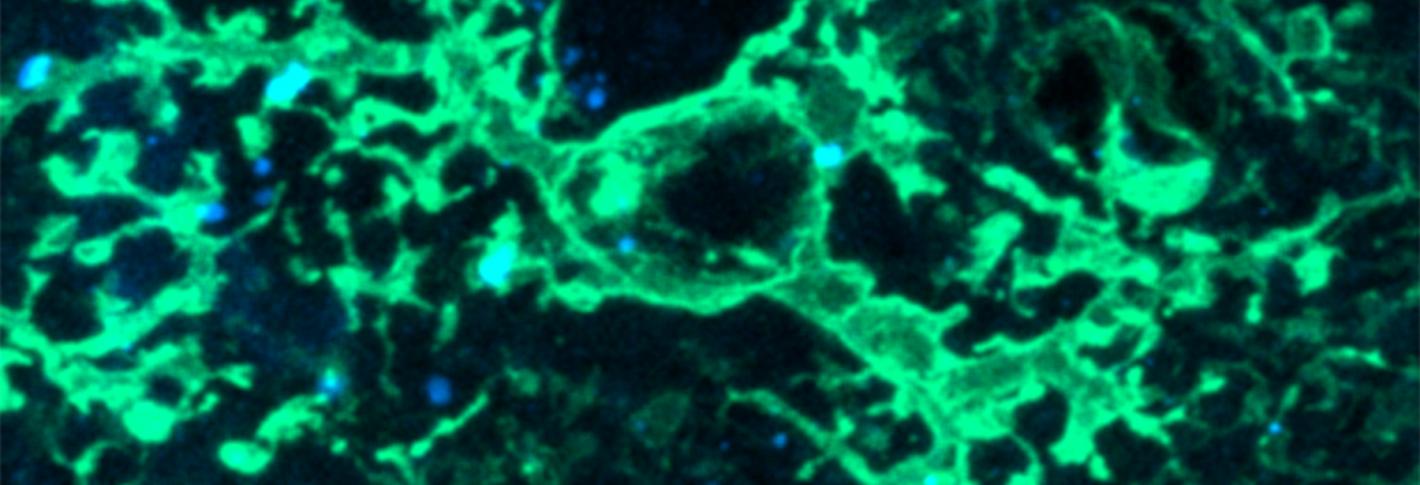

“Too much emphasis on neurons has removed any analysis of the role of these cells that are 50 percent of brain cells,” said Mriganka Sur, Newton Professor of Neuroscience in The Picower Institute for Learning and Memory at MIT and leader of the project, which is funded by the U.S. Army with up to $6.5 million over five years via a Multidisciplinary University Research Initiative grant. The team includes four faculty members in MIT’s Departments of Brain and Cognitive Sciences (BCS) and Electrical Engineering and Computer Science (EECS), as well as professors at California Institute of Technology and the University of Minnesota.

Outside of games where the rules and context never vary, time is irrelevant, and all the information about the state of the game is always apparent on the board, current state-of-the-art DNNs can remain woefully inefficient or outright oblivious to all kinds of factors that people and animals routinely must consider, Sur said. These include how to balance exploring an uncertain situation with exploiting it to advance toward the goal; how to keep track over time of which steps eventually prove crucial for success; and how to extract and transfer knowledge of those key steps for application in unexpected but related contexts.

There is growing evidence, Sur said, that astrocytes endow biological brains with these capabilities by acting as a parallel network overlaying that of neurons. The computational refinements this brings include integrating information over long time scales, selectively modulating key connections between neurons called synapses, and providing an infrastructure in which the key actions most closely associated with reward, even if the reward takes several more steps to achieve, can be recognized and repurposed. Astrocytes are key links in coordinating a process by which chemicals called neuromodulators guide neurons in the brain’s prefrontal cortex to carry out exploration and guide cells in the brain’s striatum to execute exploitation.

The collaboration will investigate the hypothesis that integrating astrocytes into DNNs can radically enhance their efficiency and performance. Collaborators Pulkit Agrawal, assistant professor in EECS, and Alexander Rakhlin, associate professor in BCS, will spearhead efforts to integrate astrocytes into reinforcement learning theory. John O’Doherty, professor of psychology at Caltech, will lead experiments to test theoretical predictions by imaging the brains of humans as they breeze through tasks that confound current DNNs. Ann Graybiel, Institute Professor in BCS, will do so in mice, where she studies reinforcement learning and its roots in the striatum. Meanwhile Sur and Alfonso Araque at Minnesota will measure how astrocytes work in brain circuits of mice as they learn, and even manipulate the cells to see how that changes reinforcement learning performance. Experimental results will then help refine the group’s theory.

For instance, humans will play a video game where they have to collect coins, but cannot do so without first finding a bucket. The bucket will frequently fill, requiring them to deposit collected coins in a bank before they can then gather more coins. People won’t take long to figure out how to recognize and navigate these shifting contexts, but a DNN should struggle mightily. Via such tasks, and simplified ones in mice, the team expects to see how brain regions and the astrocytes and neuromodulators within make key differences.
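To make the shifting contexts concrete, the rules of such a task can be sketched as a small state machine. The Python below is only an illustration under stated assumptions, not the team's actual experiment: the class name `CoinTask`, the action names, and the bucket capacity are all hypothetical. It shows why the same action ("collect") can succeed or fail depending on a context the player must track.

```python
from dataclasses import dataclass


@dataclass
class CoinTask:
    """Hypothetical toy version of the coin-and-bucket game."""

    capacity: int = 3          # assumed bucket size; not specified by the team
    has_bucket: bool = False   # coins cannot be collected until this is True
    in_bucket: int = 0         # coins currently carried
    banked: int = 0            # coins safely deposited

    def step(self, action: str) -> bool:
        """Apply one action and return True only if it changed the state."""
        if action == "find_bucket" and not self.has_bucket:
            self.has_bucket = True
            return True
        if (action == "collect" and self.has_bucket
                and self.in_bucket < self.capacity):
            self.in_bucket += 1
            return True
        if action == "deposit" and self.in_bucket > 0:
            self.banked += self.in_bucket
            self.in_bucket = 0
            return True
        # Any other action is ineffective in the current context.
        return False
```

In this sketch, collecting does nothing before the bucket is found, works until the bucket is full, and then stops working again until the coins are deposited. A learner that only associates "collect" with reward, without tracking these contexts, would waste many steps.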

“Our central hypothesis is that interaction of astrocytes with neurons and neuromodulators is [a] source of computational prowess that enables the brain to naturally perform reward learning and overcome many problems associated with state-of-the-art reinforcement learning (RL) systems,” the team wrote in their grant. “Astrocytes can integrate and modulate neuronal signals across diverse timescales ranging from synaptic activity to shifts in behavioral state and learning. Our project is a combined effort of advancing the theory for RL systems and advancing the neurobiology of astrocytic function, through a synergistic design of theory and experiments.”